Loadtesting for Open Beta, Part 4: Done optimizing the lobbyserver!

Check out Loadtesting for Open Beta, Part 1, Part 2, and Part 3 to read the previous installments of this epic tale!

It’s been a while since the last update in this series, sorry about that! At the end of Part 3, I mentioned the SimCity launch giving me pause about my goal of testing the SpyParty lobbyserver to 1000 simultaneous robots. Well, I got scared enough after their launch that I increased my optimization target to 2000 simultaneous robots on my old and slow server, and then I also decided to bite the bullet and upgrade the server hardware after I hit 2000 to give myself some extra headroom. I really don’t think I’m going to hit these numbers at Open Beta launch or even for a long time after that, but I’d rather err on the conservative side and have it purr along nicely.

Since I waited so long to post this Part 4, I can’t really give a play-by-play of all the optimizations I did as they happened, so I’m going to give the general arc I followed, and then talk about some of the interesting stops along the way.

iprof, atop, oprofile, et al.

As I mentioned at the end of the last post, I’d fixed some of the huge and obvious things with the network bandwidth usage, so it was time to start profiling the CPU usage. There are lots of different kinds of profilers, but the one I use the most is based on Sean Barrett’s iprof. I’ve modified it a fair bit over the years,1 but the core of the system is still the same. It’s a runtime profiler that requires instrumenting your code into blocks, it’s efficient enough that you can leave it on all the time as long as you don’t stick a “prof block” in an inner loop, and you can generally see where you’re spending your time hierarchically. It can draw to the screen, but I also have it output to a string, and so on the lobbyserver I can have it output to the log after a spike, and also catch a signal I send and it’ll force a prof dump. Here’s an example:

2013/04/17-16:12:10: 85.156 ms/frame (fps: 11.74) sort self - current frame

2013/04/17-16:12:10: zone self hier count

2013/04/17-16:12:10: ProcessMessages 59.2910 59.2910 1.00

2013/04/17-16:12:10: +Send 17.8164 18.4493 1120.69

2013/04/17-16:12:10: +ClientsUpkeepAndCloseLoop 2.3034 2.6989 793.15

2013/04/17-16:12:10: Log 1.3559 1.3559 25.97

2013/04/17-16:12:10: unpack_bytes 0.7311 0.7311 26.56

2013/04/17-16:12:10: unpack 0.6674 0.6674 3551.71

2013/04/17-16:12:10: +ClientsPacketLoop 0.5492 3.7160 792.58

2013/04/17-16:12:10: +ClientsUpdated 0.4023 10.9843 0.56

2013/04/17-16:12:10: +SendQueuedClientRoomMessages 0.2524 7.3086 1.00

2013/04/17-16:12:10: FindClientByID 0.2494 0.2494 267.05

2013/04/17-16:12:10: +JournalQueuedSave 0.2374 0.3882 1.43

2013/04/17-16:12:10: +Tick 0.2112 25.6313 1.00

2013/04/17-16:12:10: iprof_update 0.2051 0.2051 1.00

2013/04/17-16:12:10: +JournalSavePrep 0.1560 0.5442 1.43

As you can see, it’s pretty easy to read, and you can drill down on individual blocks and see who calls them and who they call:

2013/04/17-16:12:10: 85.156 ms/frame (fps: 11.74) sort graf - current frame

2013/04/17-16:12:10: zone self hier count

2013/04/17-16:12:10: LoginReply 0.0006 0.0006 0.01

2013/04/17-16:12:10: JOINING 0.0007 0.0008 0.03

2013/04/17-16:12:10: +TYPE_CLIENT_GAME_ID_REQUEST_PACKET 0.0011 0.0014 0.44

2013/04/17-16:12:10: +NewWaitingForJoinClients 0.0019 0.0019 0.01

2013/04/17-16:12:10: +TYPE_CLIENT_PLAY_PACKET 0.0086 0.0087 0.12

2013/04/17-16:12:10: +TYPE_CLIENT_INVITE_PACKET 0.0213 0.0225 2.37

2013/04/17-16:12:10: +TYPE_CLIENT_IN_MATCH_PACKET 0.0280 0.0282 0.49

2013/04/17-16:12:10: +JournalQueuedSave 0.0563 0.0571 1.18

2013/04/17-16:12:10: +RoomsChanged 0.0852 0.0939 15.38

2013/04/17-16:12:10: +NewInLobbyClients 0.1155 0.1342 14.55

2013/04/17-16:12:10: +ClientsUpkeepAndCloseLoop 0.1362 0.2093 150.55

2013/04/17-16:12:10: +TYPE_CLIENT_MESSAGE_PACKET 0.3122 0.3170 8.87

2013/04/17-16:12:10: +SendQueuedClientRoomMessages 6.7122 7.0013 501.99

2013/04/17-16:12:10: +ClientsUpdated 10.3361 10.5720 424.67

2013/04/17-16:12:10: -Send 17.8164 18.4493 1120.69

2013/04/17-16:12:10: unpack 0.6329 0.6329 3362.06

This is super useful. The biggest downside to it is that it’s not thread-aware, but I’ve made it thread-safe via the brute force method of having it ignore all threads that aren’t the “main”. My code is mostly single-threaded, but the threadedness increased a fair bit during these optimizations, so I hope to eventually modify iprof to be thread-aware without losing too much simplicity and performance. However, until I make those modifications, any background thread activity will show up attributed to one of these main-thread blocks. You can still get useful data, you just have to be aware of this. For example, ProcessMessages in the loop above is hiding a WaitForMultipleObjectsEx call on Windows, or a call to select/epoll on POSIX, so it’s not actually taking that much active time on the main thread.

I also used oprofile, which is a nice sampling profiler on Linux that can profile per-thread using just the debug information in an application, and atop for keeping track of things happening on the machine as a whole.

Here’s a list of the stuff I ended up optimizing:

- I was originally sending out the chat messages to all clients as they came in, but I started queuing them up and sending them all out at once to reduce send calls. Of course, once you do this, you have to make sure you don’t overflow the network packet if you have queued a lot of messages that tick, so that makes the code more complicated and harder to modify, which is a tradeoff one often has to make while optimizing, and it’s why you want to put off most optimization until you need it…although you should have a rough plan for how you’ll optimize a piece of code in the future even if you write it the dumb way first.

- I made more threads, including putting network sending and receiving on separate threads, making a separate thread for logging, and a thread for saving files to the disk. There were already threads for talking to the database and Kerberos, for receiving network packets, and for checking for new client builds. These are all relatively simple threads to add, because they’re all just throwing data into a queue on one thread and taking it out on another, although multithreading a program always makes it harder to understand. I discovered a fair number of deadlock bugs in libjingle, the library I’m using for NAT traversal and some cross platform threading stuff, and I’ve fixed some of them. I’ve veered far enough from the original libjingle code that I’m probably just going to have to put my version up as a fork, sadly.

- I timesliced the login phase for the clients. Previously, when a client would log in, I’d process a bunch of stuff immediately, including some authentication stuff which can be somewhat time consuming. In a load test where hundreds of clients log in to the server at the same time, this would bog down, so I now process a maximum of 20ms worth of clients each tick. This makes some clients wait a bit longer before they’re logged in, but doesn’t result in a positive feedback loop where there’s a really long tick, so a lot of packets will have arrived while it was happening, so the next tick is really long too, etc.

- Like the player list packets, I also made the room list packets incremental, and able to span multiple network packets. This way all the lists of players and rooms that the lobby sends to the clients can be differential and arbitrarily long, so there’s no more hard limit on the number of clients that can join the lobby. I think there’s actually a bug in this code, but I’ve only ever seen it once, even after tens of thousands of robot sessions, so I just hope it shows up more at some point.

- I switched the POSIX networking inner loop in libjingle from select to epoll. This was not so much an optimization as it was simply to allow more than 1024 sockets to work at all. epoll is also a lot faster, but I’m currently kinda using it in a dumb way, so I’m not benefiting from that speed boost much yet.

- There were also a bunch of smaller traditional code optimizations, like using maps to cache lookups, using free lists to avoid some allocations, and whatnot. Oh, and don’t forget to change the ulimit -n settings in limits.conf on Linux, so your process can actually accept a lot of connections!

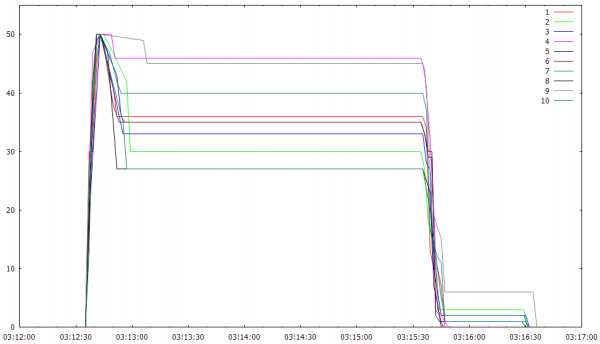

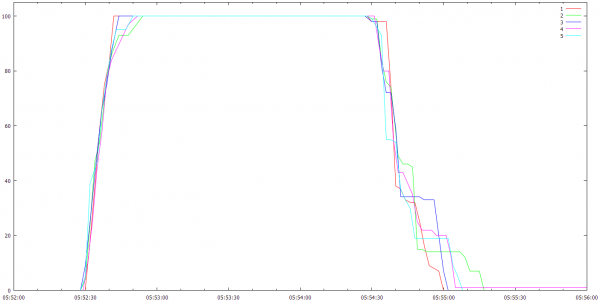

As I was doing these optimizations, I would run a loadtest with a bunch of robots and profile the lobby. I was at 500 robots at the end of Part 3, and I slowly raised the ceiling as I improved the code over the weeks: 569 robots…741 robots…789 robots, 833, 923, 942, 990, 997, 1008, 1076, 1122, 1158, 1199, 1330, 1372, 1399, 1404, 1445, 1503, 1614, 1635, 1653, 1658, 1659…

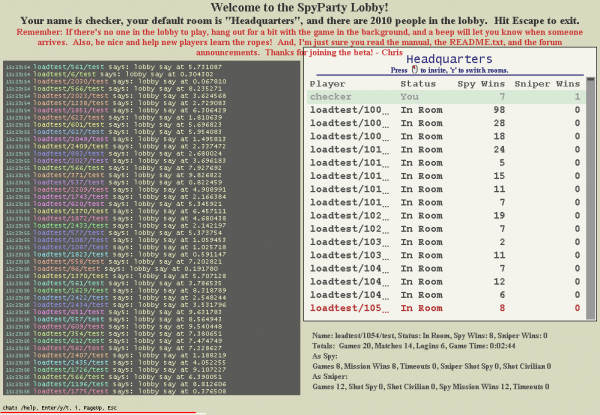

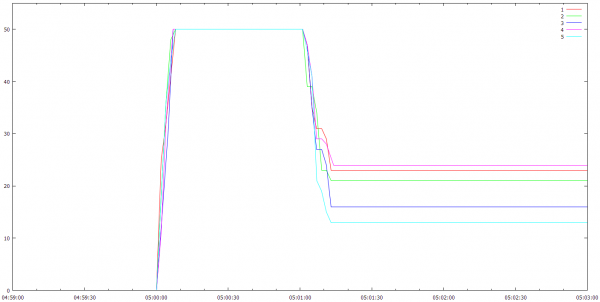

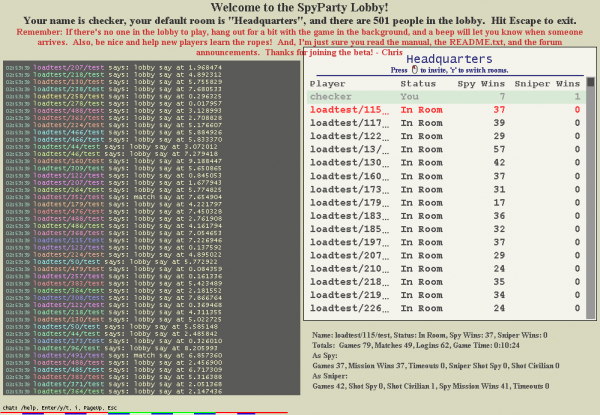

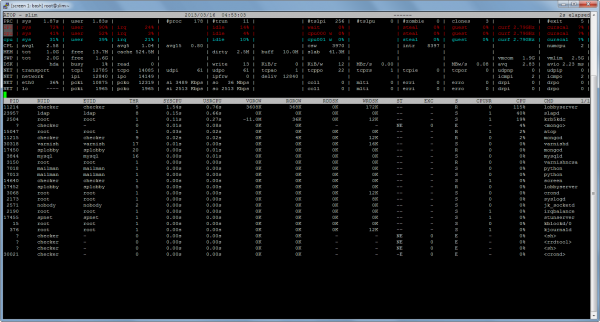

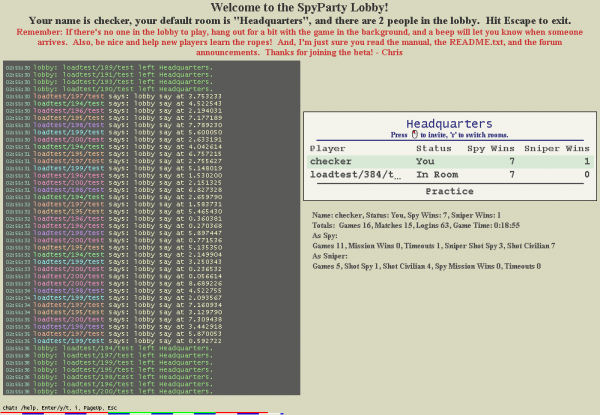

When I hit 1659 it was late one night, and so I stopped for the day. When I resumed work and did the next couple of optimizations, I figured I’d get it to 1800 or something. I always launched 20% or so more robots than I was hoping to support in a given test to account for internet and EC2 variation, and for plain old bugs in the clients that would sometimes manifest themselves, so this time I must have launched 2500 robots, because when I looked up from the profiles running in ssh terminals and over to my SpyParty client logged into the test lobby, I saw this:

Uh, I guess I was done optimizing?

I was actually kind of disappointed, to be honest. I had all sorts of cool ideas for optimizations I was planning to do that I’d come up with while testing and profiling the code, and now, if I was going to follow my own plan and stop when I hit 2000 simultaneous robots, I would have to just take a bunch of notes for next time I optimized so I could pick up where I left off, and move on. The good news is I’m pretty sure I can make the lobby almost twice as efficient if and when the time comes to do that!

Room at the Inn

If you look closely at that screenshot, you’ll see the thumb on the scrollbar for the player list is pretty small. That’s because all 2010 players are in a single room, which is not going to work very well for a lobby full of real people. In fact, the only reason there were 2010 players in that room was because at the time I’d limited the room size to 2010 because I didn’t want to bother teaching the robots how to use rooms. There were actually a few hundred more robots knocking on the door but they couldn’t get in. But, now that I’d hit my 2k target, it was time to fix that.

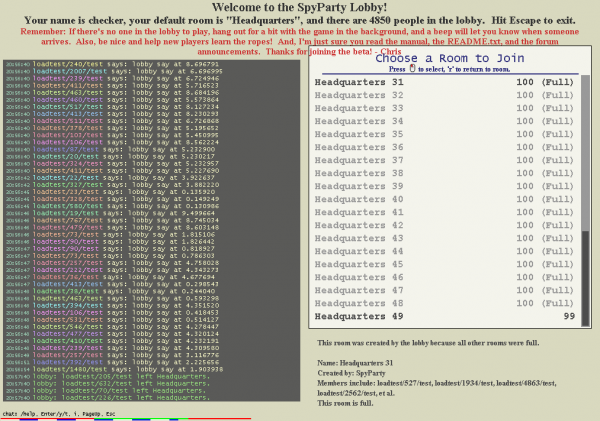

I immediately realized I had a problem. Currently, when you connect to the lobby, it sends you a list of rooms, and you have to pick one to log in. But, what if the rooms are full? Oops, you couldn’t log in. So, as soon as I set the room size down to something more reasonable, like 100, then the first 100 robots got in and the rest just sat there failing to join.

It seemed like there were a number of solutions to this problem, including allowing players to create new rooms before logging in, but in the end I went with the simplest and most robust solution, which is to have the lobby create a new empty room if all the current rooms are full. The initial room is always called Headquarters, so I named these new dynamic rooms Headquarters 2 and onward. Very creative, I know. Somebody suggested using spy movie titles for these room names, but I figured that wouldn’t scale very well, ignoring the potential copyright issues. If the lobby ever finds one of these dynamic rooms empty, it kills it, unless all the other rooms are full. I also have the lobby automatically put you in a now-guaranteed-to-exist-non-full-room if you log in and try to join a full room, even if it wasn’t full when you clicked on it, so this eliminated a login race condition too, which is always a good sign.

This last bit also made it so I didn’t need to make the loadtesting robots know very much about rooms: they always try to join Headquarters and if they don’t end up there, oh well. As they join, they kind of spill over into the latest dynamic room until it fills up, and then they continue to the next, kind of like filling up an ice tray with water from one end. I should probably make them test the actual room features by creating and changing rooms and whatnot, but the single giant 2010 player room was a way more intense loadtest than having 20 rooms with 100 players in each due to the chat broadcasting.

I don’t know if 100 is the right limit for room populations. 100 would still be way too many people to have in a single reasonable conversation, but I didn’t want to put too low of a limit on the size before I have tested things with humans instead of just robots.

The Client

There’s this annoying thing that happens when you’re testing computer code, and it’s that you encounter problems and bugs not only in the code you’re trying to test, but also in your test code. This was no different. I was constantly fixing various bugs in the robots that would keep them from all connecting correctly, and I even made sure some of the optimizations helped the client side so I could run more robots on a given EC2 server. Plus, just making sure the robots keep trying to connect and login was important, because if there was a timeout due to an initial burst, you want them to try again automatically after it dies down, rather than just sitting there not doing anything.

As I said in Part 1, I started out running about 50 robots on each m1.small EC2 instance. That didn’t scale, for some reason I’m still trying to figure out. That worked okay with a low number of instances, but as I increased the number of instances, I had to lower the number of robots on each instance, eventually to around 20 per m1.small. An AWS account starts with only being able to start 20 instances, so I did a total of two instance limit requests to Amazon, first to 100 and then to 300. It’s scary to have 300 instances running…even though m1.small instances are only 6¢ an hour each, that’s still $18 an hour when there are 300 of them running, and Amazon rounds up to the hour, so if you miss shutting them down by a minute you just lost a large pizza! It looks like Google’s new Compute Engine thing is about twice as expensive for their somewhat similar low end machine (ignoring performance differences), but charges in 1 minute increments after the first 10, which might be cheaper for this very transient use-case.

I seem to remember reading somewhere that Amazon allocates instances for the same account to the same physical machine if possible, which might explain this scaling problem, since it means I was probably maxing out a given piece of server hardware with too many instances bursting at the same time. It’s hard to tell if this is the case, and I need to do more testing before saying for sure. A commenter said there might be a packets-per-second limitation in EC2, as well, but I haven’t verified that. Once I’ve tried a few different things, I’ll do a long technical post on chrishecker.com about EC2, linode, and my dedicated host machine, comparing the different results I got.

Finally, I had to do some optimization on the SpyParty game client when the numbers started getting high. I went a little nuts with the chat system early on and it has completion on all commands, room names, and player names, but the code that builds the completion tree was calling the memory allocator 35k times per update when the numbers of players got high, so I had to remove some of the stupid in that code as well.

The New Server

With all that done, and 2010 robots running on the old server, I haggled with my hosting provider and started renting a newer and much faster server. I use SoftLayer for dedicated hosting, and have for years.2 My old server was a Pentium 4 with a single hyperthreaded core, 1GB ram, and a 100Mbps uplink, and the new server is a Xeon 3460 with four hyperthreaded cores, 4GB ram, and 1Gbps uplink, so it’s slightly more expensive but a lot faster. That said, everybody seems to be using VPS hosts these days. I talked to some other indie game developers, but I didn’t have time to do a full evaluation of the tradeoffs, so went with the devil I knew, so to speak. It seems like VPS is going to be a bit slower but also a bit cheaper, but the big advantage of VPS to me is that you can move the virtual machine image to faster hardware and have it up and running again in minutes. That’s a pretty great scaling sweetspot between having a single physical server and praying it doesn’t melt, and a scalable system that elastically uses cloud computing The Right Way™, but it’s also hundreds of times easier to get a VPS image working and then move it to a faster machine than it is to scale elastically. So, I dunno, it’s definitely something worth looking into more during the year as I see how things are scaling.

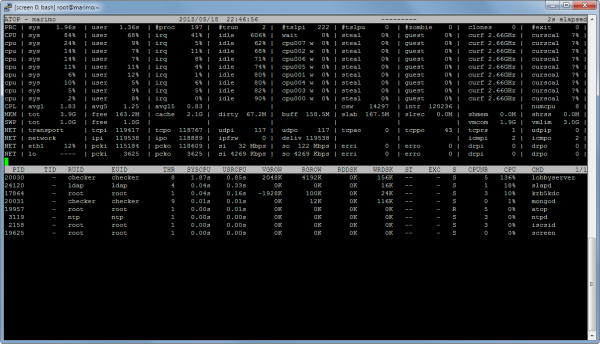

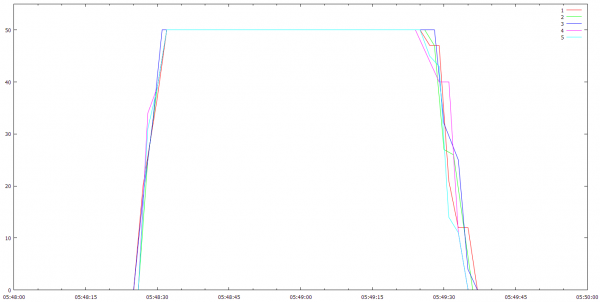

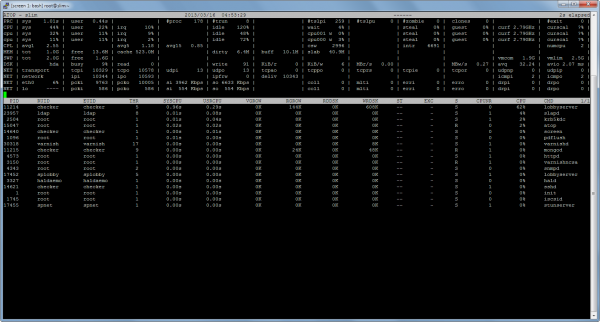

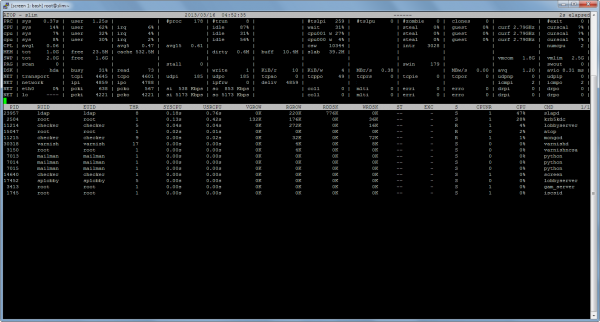

The new server ate the robots for lunch:

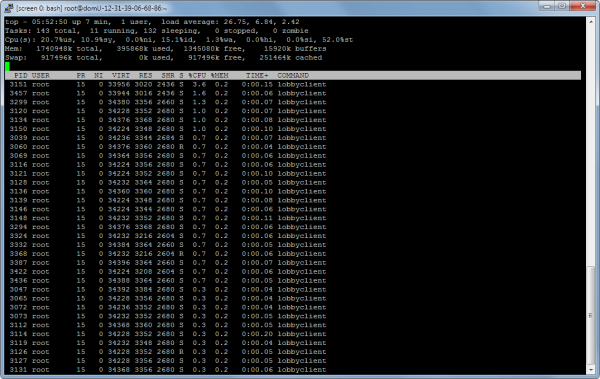

For reference, 4850 simultaneous players is pretty far up the top 100 Steam games by player count, so I don’t think I have to worry about those numbers for a while. Here’s atop’s view of things:

What’s Next?

So, that’s it for the lobbyserver loadtesting. Now I need to move the website and registration system over to the new server, test them a bit, and start inviting everybody in in big batches. Soon I’ll send out email to the beta testers to set up some scheduled human loadtests as well. The robots will be jealous, left out in the cold, looking in at all the humans actually playing the game.

Open Beta is fast approaching.