Loadtesting for Open Beta, Part 1

Way back in 2011, right before I opened up Early-Access Beta signups, I loadtested and optimized the signup page to make sure it wouldn’t crash if lots of people were trying to submit their name and email and confirm their signup. I always intended to write up a technical post or two about that optimization process because it was an interesting engineering exercise, but I have yet to get around to it. However, I can summarize the learnings here pretty quickly: WordPress is excruciatingly slow, Varnish is incredibly fast, I ♥ Perl,1 Apache with plain old mod_php (meaning not loading WordPress) was actually way faster than I expected, slightly faster even than nginx + php-fpm in my limited tests, CloudFront is pretty easy to use,2 and even cheap and small dedicated servers can handle a lot of traffic if you’re smart about it.

As with any kind of optimization, the rule is Assume Nothing, so you should always write the loadtester first, run it to get a baseline performance profile, and keep running it as you optimize the hotspots. When I started, the signup submission could only handle 2 or 3 submits per second. When I was done, it could handle 400 submissions per second. I figured that was enough.3 If more than 400 people were signing up for the SpyParty beta every second, well, let’s file that under “good problem to have”.
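To make the "loadtester first" idea concrete, here is a minimal sketch of a throughput harness. It is not the loadtester used for the signup page; `measure_throughput` and `fake_submit` are hypothetical names, and `fake_submit` just sleeps where a real test would submit the form.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def measure_throughput(submit, total_requests, concurrency):
    """Call submit() total_requests times across worker threads.

    Returns (ok, failed, successful_calls_per_second).
    """
    def attempt(_):
        try:
            submit()
            return True
        except Exception:
            return False

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(attempt, range(total_requests)))
    elapsed = time.perf_counter() - start

    ok = sum(results)
    failed = total_requests - ok
    per_second = ok / elapsed if elapsed > 0 else float("inf")
    return ok, failed, per_second


def fake_submit():
    # Hypothetical stand-in for one signup form submission.
    time.sleep(0.001)


if __name__ == "__main__":
    ok, failed, rate = measure_throughput(fake_submit, total_requests=200, concurrency=20)
    print(f"{ok} ok, {failed} failed, {rate:.0f} submissions/sec")
```

Running the same harness before and after each optimization is what gives you the baseline to compare against.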

After all the loadtesting and optimizing, the signups went off without a hitch.

Loadtesting and optimizing the beta signup process was important, because the entire reason I took signups instead of just letting people play immediately was “fear of the unknown”. I couldn’t know in advance how many people would be interested in the game, and getting a couple web forms scalable in case that number was “a lot” was much easier than getting the full game and its server scalable, and that’s ignoring the very real need to exert some control over the growth of the community, to make sure the game wasn’t incredibly buggy on different hardware configurations or that there wasn’t some glaring balance issue, etc. Overall, starting with signups and a closed beta was great for the game, even if it’s meant frustrating people who signed up and want to play.

But it’s been long enough, and I’m now finally actively loadtesting and optimizing for opening the beta!

Lobby Loadtesting Framework

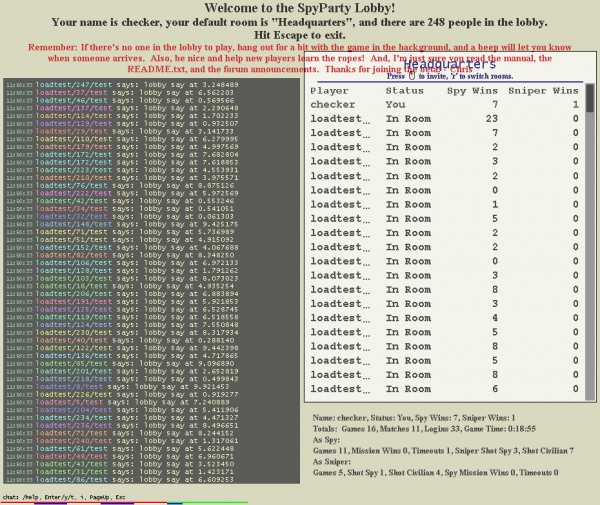

Like with the signup form, I’m loadtesting first. This will tell me where I need to optimize, and allow me to test my progress against the baseline. However, loadtesting a game lobby server is a lot more complicated than loadtesting a web form, so it’s a bit slower-going. I’ve had to create a robot version of the game client that logs into the lobby, chats, invites other robots to play, and then reports on the results of the fake games played. I built this on top of the game’s client interface, so it looks just like a real game to the lobby.

As with all testing, you need to make sure you aren’t Heisenberg-ing4 your results, so I wanted to get fairly close to the same load that would happen with multiple real game clients hitting the server. This meant I had to have a good number of machines running these robots hitting the test lobby at the same time, and that meant using cloud computing. I was inspired by the Bees with Machine Guns article about using Amazon Web Services’s Elastic Compute Cloud (EC2) to launch a bunch of cheap HTTP load testers. I use AWS for SpyParty already, distributing updates and uploading crashdumps using S3, so this seemed like a good fit.

At first I tried modifying the bees code to do what I wanted, but I found the Python threading technique they used for controlling multiple instances didn’t scale well running on Windows, and since I wanted more control over the instances anyway and the core idea was not terribly difficult to implement, I wrote my own version in Perl, which I’m much more familiar with. The code uses Net::Amazon::EC2 to talk to AWS to start, list, and stop EC2 instances, and Net::SSH2 to talk to the instances themselves, executing commands and waiting for exit codes, downloading logs, and whatnot. I just use an existing CentOS EC2 AMI5 and then have the scripts download and install my robots onto it from S3 every time I start one up; I didn’t want to bother with creating a custom AMI when my files are pretty small. I’m going to post all the loadtest framework code once I’ve got it completely working so others can use it.

How Much is Enough?

In loadtesting the loadtesters, I found that an m1.small instance could run about 50 loadtest bots simultaneously with my current client code. I can switch to larger and more expensive EC2 instance types if I need to run more robots per instance, and as I optimize the server I’m pretty sure the client code will get optimized as well, which will allow more concurrency. Amazon limits accounts to 20 simultaneous EC2 instances until you apply for an exception, so I’ve done that,6 but even with that limitation, I can loadtest to about 1000 concurrent clients, which seems like more than enough for now.
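The capacity arithmetic here is simple enough to write down. This is a small sketch using the two figures from the paragraph above (about 50 bots per m1.small and a default limit of 20 instances); the function names are made up for illustration.

```python
import math

BOTS_PER_INSTANCE = 50        # measured on an m1.small, per the post
DEFAULT_INSTANCE_LIMIT = 20   # default per-account EC2 limit cited in the post


def instances_needed(target_bots, bots_per_instance=BOTS_PER_INSTANCE):
    """Number of instances required to run target_bots robots at once."""
    return math.ceil(target_bots / bots_per_instance)


def max_bots(instance_limit=DEFAULT_INSTANCE_LIMIT, bots_per_instance=BOTS_PER_INSTANCE):
    """Largest concurrent robot count possible under an instance limit."""
    return instance_limit * bots_per_instance


if __name__ == "__main__":
    print(instances_needed(250))   # instances for a 250-robot run
    print(max_bots())              # ceiling under the default limit
```

If the robots get cheaper to run, or a larger instance type is used, only `bots_per_instance` changes.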

I still don’t know exactly what to expect when I open up the beta, but I don’t think I’ll hit 1000 simultaneous SpyParty players outside of loadtesting anytime soon. If you look at the Steam Stats page, 1000 simultaneous players is right in the middle of the top 100 games on the entire service, including some pretty popular mainstream games with mature player communities. In the current closed beta, I think our maximum number of simultaneous players has been around 25, and it’s usually between 10 and 15 on any given night at peak times, assuming there’s no event happening and I haven’t just sent out a big batch of invites. I still have about 6000 people left to invite for the first time from the signup list, and 9000 who didn’t register on their first invite to re-invite, all of whom I’ll use for live player loadtesting after the 1000 robots are happily playing without complaints. I think the spike from those last closed invites will be bigger than the open beta release spike, unless there are a ton of people who didn’t want to sign up with their email address, but who will buy the game once the beta is open. I guess that’s possible, but who knows? Again, if we go over 1000 simultaneous, I guess I will scramble to move the lobby to a bigger server, and keep repeating the “good problem to have” mantra over and over again, but I’m betting it’s not going to happen and things will go smoothly.

After open beta there will be a long list of awesome stuff coming into the game, including new maps and missions, spectation and replays, the new art, and lots more, but once things are open it’ll be easier to predict the size of those spikes and plan accordingly. Eventually I’ll probably (hopefully?) have to move the lobby off my current server, but I’m pretty sure based on my initial testing that the old girl can keep things going smoothly a bit longer.

Initial Loadtesting Baseline

Okay, so what happens when I unleash the robots? Well, I haven’t let 1000 of them loose yet, but I’ve tried 500, and things fall over, as you might expect. It looks like around 250 is the maximum that can even connect right now, which is actually more than I thought I’d start out with.

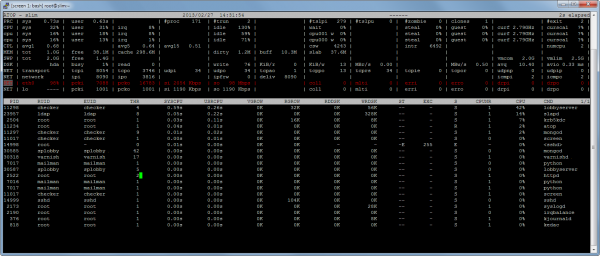

Things don’t work very well even with 250 clients, though, with connections failing, and match invites not going through.7 However, when I looked at atop while the robots were pounding on the lobby, a wonderful thing was apparent:

Neither the CPU utilization nor the memory utilization was too terrible, but the lobbyserver was saturating the 100 Mbps ethernet link! That’s awesome, because that’s going to be easy to fix!

Before I explain, let me say that the best kind of profile is one with a single giant spike, one thing that’s obviously completely slow and working poorly. The worst kind of profile is a flat line, where everything is taking 3% of the time and there’s no single thing you can optimize. This is a great profile, because it points right towards the first thing I need to fix, which is the network bandwidth.

My protocol between the game clients and the lobby server is really pretty dumb in a lot of ways, but the biggest way it’s dumb is that on any state change of any client, it sends the entire list of clients and their current state to every client. This is the simplest thing to do and means there’s no need to track which clients have received which information, and this in turn means it’s the right thing to do first when you’re getting things going, but it’s also terribly wasteful performance-wise compared to just sending out the clients who changed each tick. So, I was delighted to see that bandwidth was my first problem, because it’s easy to see that I have to fix the protocol. I’m guessing switching to a differential player state update will cut the bandwidth by 50x, which will then reveal the next performance spike.
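To see why the full-list broadcast hurts so much, here is a rough sketch comparing it with a differential update. This is an idealized model, not the actual SpyParty protocol: the state values, the `bytes_per_entry` figure, and all function names are assumptions for illustration.

```python
def full_broadcast_bytes(num_clients, bytes_per_entry, changes):
    """Each state change sends the whole client list to every client."""
    return changes * num_clients * num_clients * bytes_per_entry


def delta_broadcast_bytes(num_clients, bytes_per_entry, changes):
    """Each state change sends only the changed entry to every client."""
    return changes * num_clients * bytes_per_entry


def diff_states(previous, current):
    """Return (changed_or_added, removed_ids) between two {client_id: state} maps."""
    changed = {
        cid: state
        for cid, state in current.items()
        if cid not in previous or previous[cid] != state
    }
    removed = [cid for cid in previous if cid not in current]
    return changed, removed


if __name__ == "__main__":
    # Hypothetical numbers: 250 clients, 32 bytes per list entry, 1000 changes.
    full = full_broadcast_bytes(250, 32, 1000)
    delta = delta_broadcast_bytes(250, 32, 1000)
    print(full, delta, full // delta)
```

In this simplified model the savings factor equals the number of connected clients, which is an upper bound. A real differential protocol has to carry client ids and handle joins and resyncs, and several clients can change in the same tick, so the actual gain will be smaller and needs to be measured.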

I can’t wait to find out what it will be!8

Oh, and the total EC2 bill for my loadtesting over the past few days: $5.86

So…Open Beta?

Within weeks! Weeks, I tell you!

Oh, and as I’ve said before, everybody who is signed up will get invited in before open beta. I will then probably have a short “quiet period” where I let things settle down before really opening it up, so if you want in before open beta, sign up now.

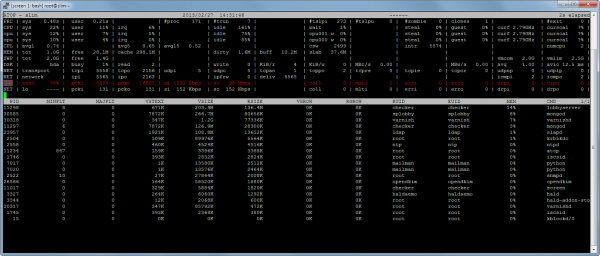

Update: Assuming More Nothing…Er, Less Nothing?

After posting this article, I was about to start optimizing the client list packets, when it occurred to me I wasn’t assuming enough nothing, because I was assuming it was the client list taking all the bandwidth. This made me a bit nervous, which is the right feeling to have when you’re not following your own advice,9 so I implemented a really simple bit of code that accumulated the per-packet send and receive sizes, and printed them on exit, and then threw another 250 robots at the server for 60 seconds. The results validated the client list assumption: it’s by far the biggest bandwidth consumer, sending 1.6GB in 60 seconds.10 However, it did show that the lobby sending chat and status messages to the clients is also maybe going to be a problem, so yet again: measuring things is crucial.

| Packet Type | Total Bytes |
| --- | ---: |
| TYPE_LOBBY_PLAYER_LIST_PACKET | 1632549877 |
| TYPE_LOBBY_MESSAGE_PACKET | 66687600 |
| TYPE_LOBBY_ROOM_LIST_PACKET | 9474937 |
| TYPE_CLIENT_INVITE_PACKET | 303056 |
| TYPE_CLIENT_MESSAGE_PACKET | 226779 |
| TYPE_CLIENT_LOGIN_PACKET | 157795 |
| TYPE_LOBBY_INVITE_PACKET | 131667 |
| TYPE_LOBBY_LOGIN_PACKET | 77951 |
| TYPE_KEEPALIVE_PACKET | 43032 |
| TYPE_CLIENT_GAME_JOURNAL_PACKET | 5478 |
| TYPE_LOBBY_PLAY_PACKET | 1888 |
| TYPE_LOBBY_WELCOME_PACKET | 1491 |
| TYPE_CLIENT_JOIN_PACKET | 1491 |
| TYPE_CLIENT_PLAY_PACKET | 836 |
| TYPE_CLIENT_IN_MATCH_PACKET | 713 |
| TYPE_LOBBY_IN_MATCH_PACKET | 532 |
| TYPE_CLIENT_CANDIDATE_PACKET | 490 |
| TYPE_LOBBY_GAME_ID_PACKET | 300 |
| TYPE_CLIENT_GAME_ID_REQUEST_PACKET | 30 |
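The accounting that produced these numbers can be very small. Here is a minimal sketch of the idea in Python, not the lobby server's actual code: `PacketStats` and `megabits_per_second` are hypothetical names, and the packet type strings are just the labels from the table above.

```python
from collections import Counter


class PacketStats:
    """Accumulates total bytes per packet type."""

    def __init__(self):
        self.bytes_by_type = Counter()

    def record(self, packet_type, size):
        # Call this wherever a packet is sent or received.
        self.bytes_by_type[packet_type] += size

    def report(self):
        # Biggest bandwidth consumers first.
        return self.bytes_by_type.most_common()


def megabits_per_second(total_bytes, seconds):
    """Convert a byte total over a time window into Mbps (1 Mb = 1,000,000 bits)."""
    return total_bytes * 8 / 1_000_000 / seconds


if __name__ == "__main__":
    stats = PacketStats()
    stats.record("TYPE_LOBBY_PLAYER_LIST_PACKET", 2000)
    stats.record("TYPE_KEEPALIVE_PACKET", 12)
    stats.record("TYPE_LOBBY_PLAYER_LIST_PACKET", 1000)
    for packet_type, total in stats.report():
        print(f"{packet_type:40s} {total:>12d}")
    # Player list total from the table, over the 60 second run:
    print(f"{megabits_per_second(1632549877, 60):.1f} Mbps")
```

Converting the player list total to a rate makes the problem obvious, since the result is well above what a 100 Mbps link can carry.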

It’s interesting that the clients are only sending about 227KB worth of chat messages to the lobby while the lobby is sending 66MB back to them, but presumably every message gets fanned out to all of the roughly 250 connected clients, and 250 * 227KB is around 57MB, so it makes back-of-the-envelope sense. I’m probably going to need to investigate that more once I’ve hammered the player list traffic down. Maybe I’ll have to accumulate the messages every tick, compress them all, and send them out.
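One possible shape for the accumulate-and-compress idea is sketched below. It is only an illustration: the length-prefixed framing and the names `pack_tick` and `unpack_tick` are assumptions, not the lobby protocol, and it assumes each message is shorter than 65536 bytes so a 2-byte length prefix is enough.

```python
import zlib


def pack_tick(messages):
    """Join one tick's messages with 2-byte length prefixes, then compress the batch."""
    payload = b"".join(len(m).to_bytes(2, "big") + m for m in messages)
    return zlib.compress(payload)


def unpack_tick(blob):
    """Reverse pack_tick: decompress and split back into individual messages."""
    payload = zlib.decompress(blob)
    messages = []
    offset = 0
    while offset < len(payload):
        length = int.from_bytes(payload[offset:offset + 2], "big")
        offset += 2
        messages.append(payload[offset:offset + length])
        offset += length
    return messages


if __name__ == "__main__":
    tick = [b"player%d: hello lobby" % i for i in range(100)]
    blob = pack_tick(tick)
    raw = sum(len(m) for m in tick)
    print(f"raw {raw} bytes, packed {len(blob)} bytes")
    assert unpack_tick(blob) == tick
```

Chat text tends to be repetitive, so batching usually compresses well, but it trades bandwidth for CPU time and a little latency, which is exactly the kind of thing that has to be measured rather than assumed.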

1. See this thread for how I wrote the dynamic loadtesting form submission in a way that would saturate the network link.

2. I use CF for images and other static stuff, with W3 Total Cache to keep them synced to S3, but I only use W3TC for this CDN sync, since Varnish blows it out of the water for actual caching.

3. Let me be clear, I think 400 submissions per second is really pretty slow for raw performance on a modern computer, but web apps these days have so many layers that you lose a ton of performance relative to what would happen if you wrote the whole thing in C. For an interesting example of this, there’s a wacky high performance web server called G-WAN that gets rid of all the layers and lets you write the pages directly in compiled C.

4. I just read on Wikipedia that the uncertainty principle is often confused with the observer effect, and so on the surface this verbing of Heisenberg’s name isn’t correct, except he apparently also confused the two, so I’m going to keep on verbing.

5. ami-c9846da0

6. Although they haven’t gotten back to me, so I guess I’ll apply again…sigh, customer service “in the cloud”. Update: Woot! My limit has been increased, now I can DDOS myself to my heart’s content!

7. Let’s ignore the lobby UI also drawing all over itself for now.

8. You can see the CPU usage is pretty high relative to the memory usage, and seeing slapd and krb5kdc in there is a bit worrying, since that’s Kerberos and LDAP, which are used for the login and client authentication and are going to be a bit harder to optimize if they start poking their heads up too high, but both of them have very battle-tested enterprise-scale optimization solutions via replication, so worst-case is I’ll have to get another machine for them, I think. If the lobbyserver itself is still CPU-bound after fixing the bandwidth issue, then I’ll start normal code optimization for it, including profiling, of course. I’ll basically recurse on the lobbyserver executable!

9. …let alone Mike Abrash’s advice!

10. Or actually trying to send, since 1.6GB in 60 seconds is over 200Mbps, which is not happening on a 100Mbps link!

248 people! I bet it’s like the matrix watching their messages fly by.

I like these technical posts. Gives me a better understanding of all the things you have to go through before the game is complete(?)

Oh sure, now you’re assuming that compression won’t take more CPU/memory resources that won’t bottleneck you in the process of reducing network bandwidth… :)

Not assuming anything! It’s definitely worth testing, though, assuming those messages become the top performance problem. We shall see!

Checker, have you considered that there are a bunch of people who didn’t purchase your game simply because they won’t be able to get their hands on it immediately? I know a couple of friends who are waiting for the beta to open up before purchasing the game, just to ensure a better playing experience and so they can get their hands on the game immediately.

Yeah, definitely, I just don’t know if there are more people that know about it and decided not to sign up and will all try to register for open beta, or if it’s a smaller number of people. No clue, hence the nerves!

I enjoy the blog posts like this – they’re excellent sources of information that might be useful to me if I ever get into multiplayer game development. I like The Witness’ posts for the same reason (but more geared to graphical solutions).

Thanks! I like doing these kinds of nitty gritty posts, whether about tech or small design problems.